1. Sampling Bias

Sampling bias occurs when the test group is not representative of the target audience or is not randomly selected, which distorts the results. For example, when an A/B test runs on a website with strong seasonal traffic fluctuations, user behavior may vary significantly depending on the testing period. This undermines the validity and reliability of the results and makes it difficult to generalize insights to the full customer base. To minimize sampling bias, ensure that test groups are sufficiently large, well balanced, and randomly assigned, and that all variants are exposed to users over the same timeframe.
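Random assignment can be illustrated with a minimal sketch. It assumes each visitor has a stable identifier; the function name `assign_variant`, the experiment name, and the user IDs are hypothetical and chosen only for illustration. Hashing the ID together with the experiment name gives every user the same variant on every visit while keeping the split close to even.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant using a salted hash."""
    # Salting with the experiment name keeps assignments independent
    # from one experiment to the next.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Hypothetical user IDs, for illustration only.
users = [f"user-{i}" for i in range(10_000)]
counts = {"A": 0, "B": 0}
for user in users:
    counts[assign_variant(user, "homepage-headline")] += 1

print(counts)  # expected to be close to an even split
```

Because the assignment depends only on the ID and the experiment name, it does not drift with traffic source, time of day, or season, which is the property the paragraph above asks for.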

2. Self-Selection Bias

Self-selection bias occurs when participants voluntarily opt into a study, often resulting in a sample that differs meaningfully from the overall population. For instance, in a customer satisfaction survey, most responses may come from users with extreme experiences (either highly satisfied or highly dissatisfied), while neutral users remain underrepresented. This can lead to misleading conclusions because it overlooks differences in behavior and preferences across the broader audience. To mitigate self-selection bias, collect data from multiple sources and from a wider participant pool. In parallel, marketers should track and analyze multiple metrics across the entire conversion funnel, rather than focusing only on the metrics directly affected by the tested variable.

3. Measurement Bias

Measurement bias arises when experimental data is inaccurate, incomplete, or inconsistent. For example, during an A/B test on a landing page, technical issues may prevent some users from seeing the correct version or from being properly tracked by analytics systems. This can result in missing or skewed data, which distorts test outcomes and makes any statistical conclusion drawn from them unreliable. To reduce measurement bias, it is critical to ensure accurate test setup, reliable tracking implementation, and high-quality data collection throughout the experiment.
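One common way to spot this kind of tracking problem is a sample ratio mismatch check, which is not described in this article and is offered here only as a hedged sketch. It compares the number of tracked users per variant against the intended split using a chi-square test with one degree of freedom. The function name `srm_p_value` and the traffic numbers are assumptions made for illustration.

```python
import math

def srm_p_value(observed_a: int, observed_b: int, expected_share_a: float = 0.5) -> float:
    """P-value of a chi-square goodness-of-fit test for a two-variant split."""
    total = observed_a + observed_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((observed_a - expected_a) ** 2 / expected_a
            + (observed_b - expected_b) ** 2 / expected_b)
    # For one degree of freedom, the chi-square survival function
    # equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))

# Made-up counts of tracked users per variant.
print(srm_p_value(5000, 5000))  # balanced split, no sign of a problem
print(srm_p_value(5000, 4600))  # variant B is missing users
```

A very small p-value suggests that users are being lost or misrouted in one variant, so the setup and tracking should be checked before the results are read.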

4. Novelty Bias

Novelty bias occurs when users respond more positively to a new version of digital content simply because it is new, not because it performs better. For example, when an A/B test compares two creative formats in the same ad placement, such as a static banner versus a spinner, the spinner may initially generate higher click-through rates because it is more interactive. However, this uplift may not translate into improved conversion rates or customer retention. In that case, users are curious about the new ad format but are not genuinely more interested in the product or service. To limit novelty bias, experiments should run long enough for the initial novelty effect to fade, and results should be compared against historical baseline performance.
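A simple way to look for a fading novelty effect is to compare the lift in the first days of the test with the lift afterwards. The sketch below assumes daily totals are available per variant; the `DayStats` layout, the function names, the seven-day cutoff, and all numbers are hypothetical.

```python
from typing import List, Tuple

# (control_conversions, control_users, variant_conversions, variant_users)
DayStats = Tuple[int, int, int, int]

def pooled_lift(days: List[DayStats]) -> float:
    """Relative lift of the variant over the control, pooled across days."""
    control_rate = sum(d[0] for d in days) / sum(d[1] for d in days)
    variant_rate = sum(d[2] for d in days) / sum(d[3] for d in days)
    return (variant_rate - control_rate) / control_rate

def early_vs_late_lift(days: List[DayStats], early_days: int = 7) -> Tuple[float, float]:
    """Lift during the first `early_days` days and during the remaining days."""
    return pooled_lift(days[:early_days]), pooled_lift(days[early_days:])

# Made-up data: a strong first week followed by a much weaker second week.
days = [(20, 1000, 30, 1000)] * 7 + [(20, 1000, 21, 1000)] * 7
early, late = early_vs_late_lift(days)
print(f"early lift: {early:.0%}, late lift: {late:.0%}")
```

If the early lift is much larger than the late lift, the initial result was probably driven by curiosity, and the later period is the better estimate of long-term performance.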

5. Confirmation Bias

Confirmation bias occurs when analysts interpret A/B test results in a way that reinforces their existing beliefs or expectations, rather than staying objective. For example, when testing different headline variations, a marketer may favor the version that matches personal preferences, even if the performance difference is not statistically significant. This can lead to incorrect conclusions, such as false positives or false negatives, and ultimately to poor optimization decisions. To reduce confirmation bias, teams should clearly define hypotheses and success criteria before launching experiments, and rely on rigorous statistical methods and objective data analysis throughout the evaluation process.
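Fixing the decision rule before launch can be sketched with a two-proportion z-test, one standard choice among several and not necessarily the method any particular team uses. The threshold `ALPHA`, the function name, and the conversion counts below are assumptions for illustration.

```python
import math

ALPHA = 0.05  # significance threshold, agreed on before the test starts

def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation in the data, so no detectable difference
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))

# Made-up headline test: 2.00% versus 2.15% conversion.
p_value = two_proportion_p_value(200, 10_000, 215, 10_000)
decision = "ship variant B" if p_value < ALPHA else "no significant difference"
print(f"p = {p_value:.3f}: {decision}")
```

With the threshold written down in advance, a preferred headline cannot be declared the winner on the strength of a small difference that falls within random variation.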

Conclusion

Overall, A/B testing is a powerful methodology for optimizing campaign performance, but its results can be misleading if potential sources of bias and error are not carefully controlled. To ensure accurate and reliable data analysis, businesses must design experiments thoughtfully, apply proper randomization, collect comprehensive data, and interpret results objectively.

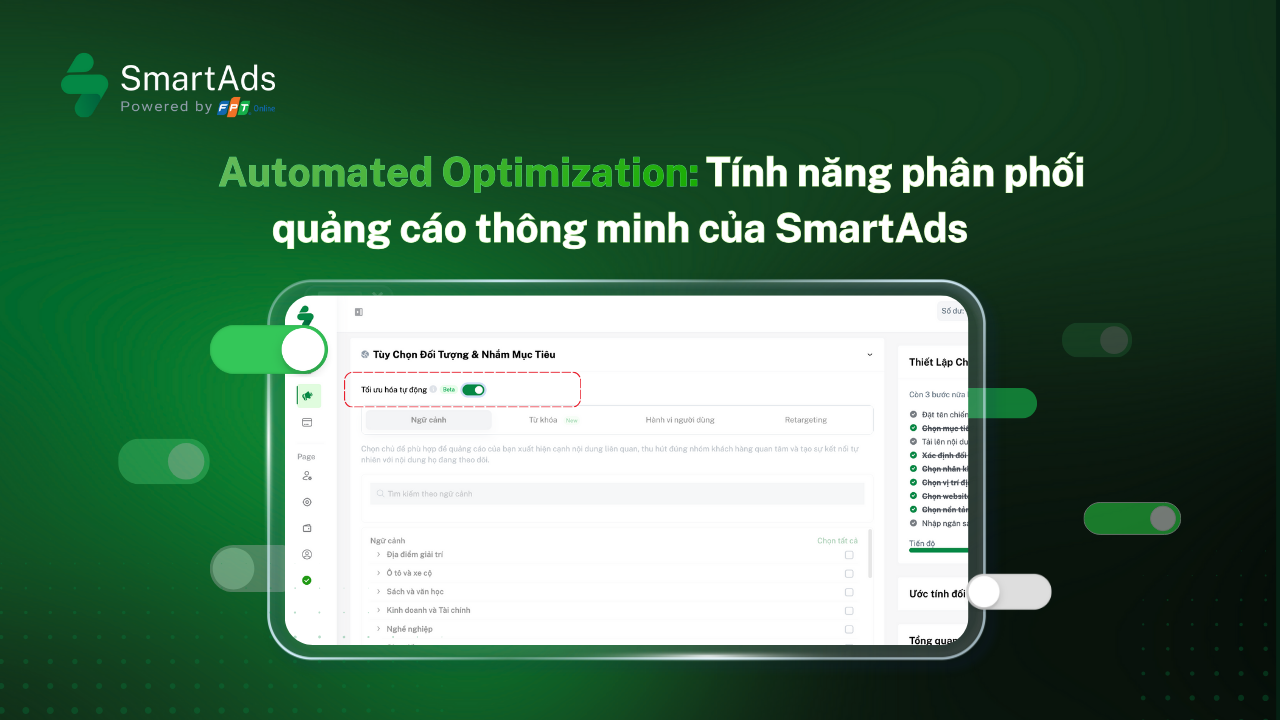

With its advertising platform across Vietnam’s leading digital publishers, SmartAds aims to deliver performance-driven advertising solutions, partnering with businesses to run A/B tests effectively, optimize outcomes, and execute data-driven marketing strategies with confidence.