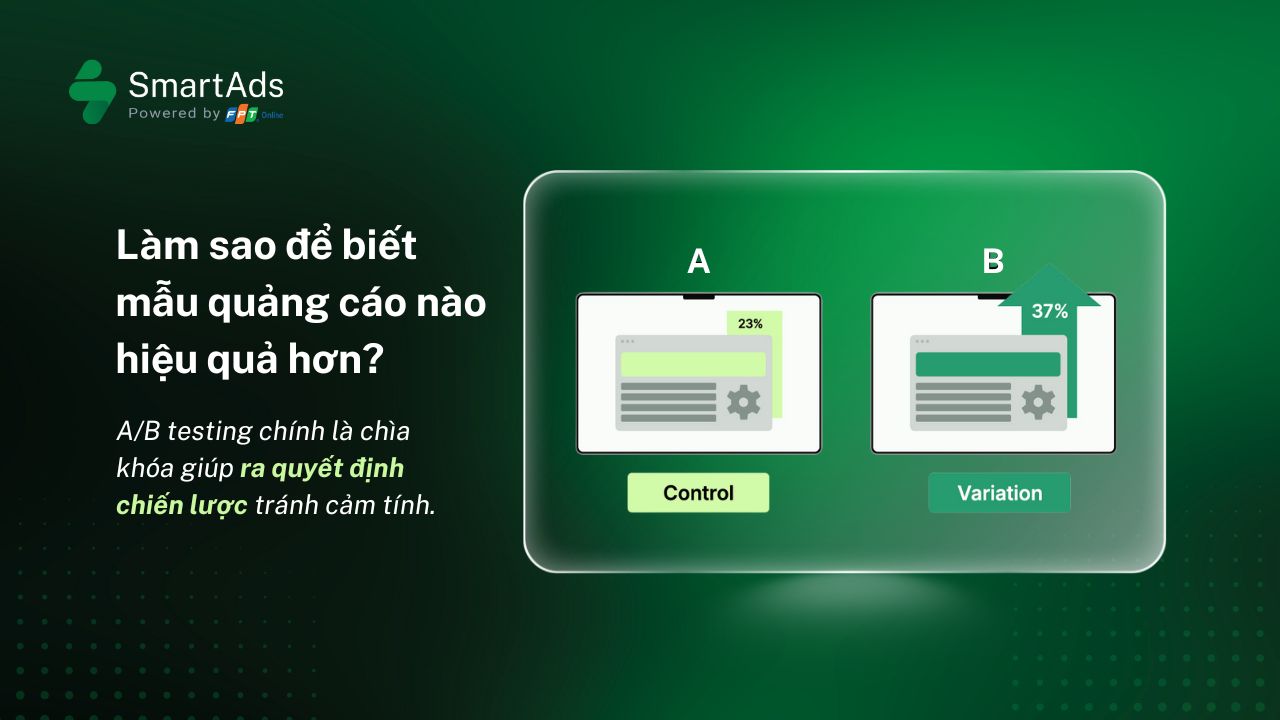

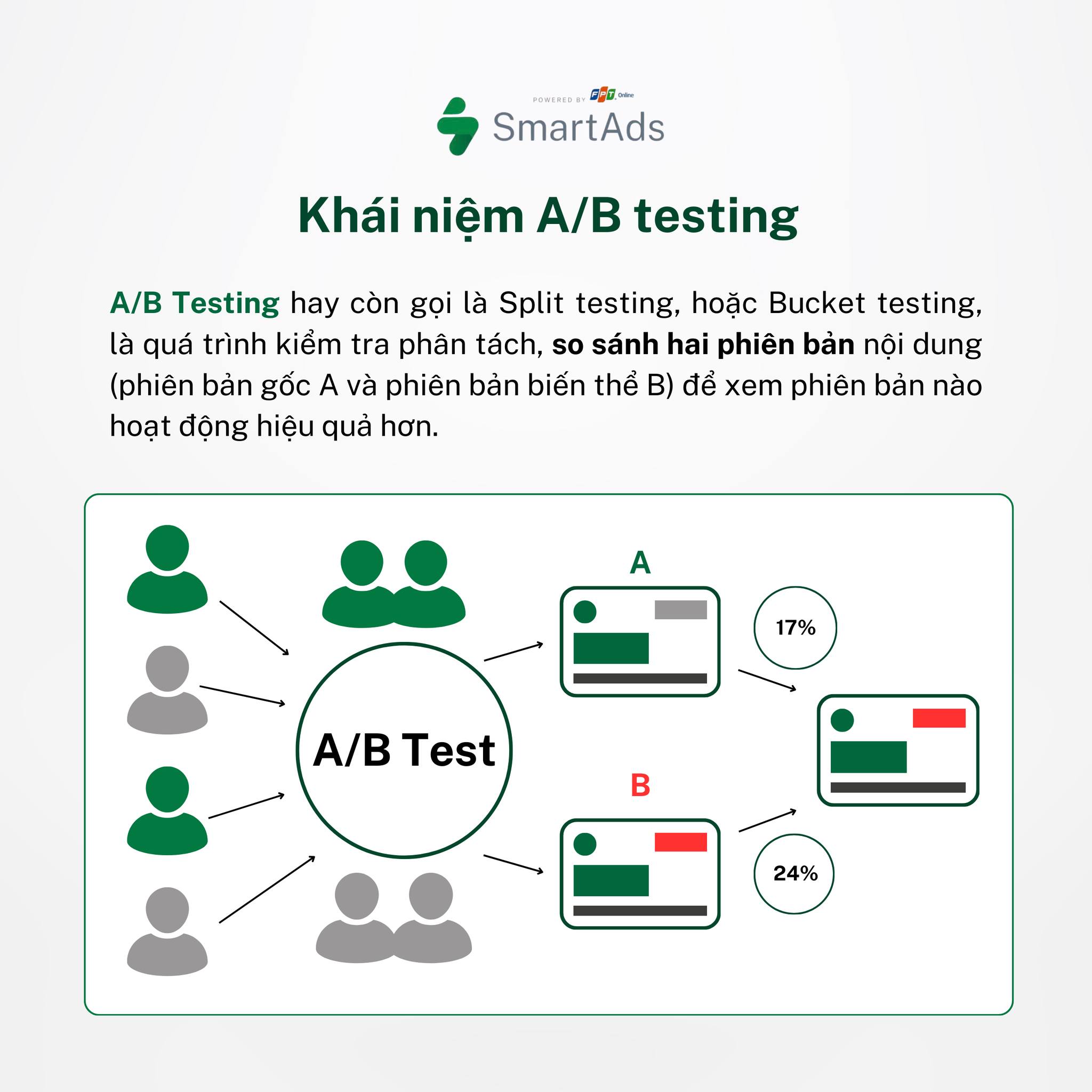

What is A/B testing? Definition and how it works

A/B testing, also known as split testing, is a method of comparing two advertising versions (A and B) by randomly dividing the audience into two groups. Group A is exposed to the original version, while Group B sees a version with only one modified element, such as the headline or visual. After a defined period, marketers analyze the data to determine which version drives higher conversions or click-through rates.
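The random split described above can be sketched in a few lines. This is a minimal illustration, not how any particular ad platform assigns traffic: the function name `assign_group`, the experiment label, and the user ID format are all hypothetical. Hashing the user ID keeps the assignment stable, so the same user always sees the same version.

```python
import hashlib


def assign_group(user_id: str, experiment: str = "headline_test") -> str:
    """Deterministically assign a user to group 'A' or 'B' (roughly 50/50).

    `experiment` is a hypothetical label; including it in the hash means a
    user can land in different groups for different experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # The hash value is effectively uniform, so its parity splits users evenly.
    return "A" if int(digest, 16) % 2 == 0 else "B"


if __name__ == "__main__":
    groups = [assign_group(f"user-{i}") for i in range(10_000)]
    print("A:", groups.count("A"), "B:", groups.count("B"))
```

In practice, most advertising platforms handle this split for you; the sketch only shows the principle of an unbiased, repeatable assignment.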

In traditional A/B testing, only one variable is changed at a time to ensure objective and reliable results. If multiple elements need to be tested simultaneously, businesses can apply multivariate testing. However, this approach requires more complex analysis and a significantly larger sample size.

Practical benefits of applying A/B testing in advertising

From a strategic perspective, implementing A/B testing delivers tangible value for businesses when evaluating and optimizing advertising performance:

Optimize Click-Through Rate (CTR), Conversion Rate (CR), and ROI

The core value of A/B testing lies in its ability to accurately measure user behavior, improve click-through rate (CTR) and conversion rate (CR), and reduce cost per acquisition (CPA) or cost per click (CPC).
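As a rough illustration of how these metrics relate, the sketch below computes them from raw campaign counts. The `AdStats` fields and the `summarize` helper are hypothetical names, and the conversion rate is assumed here to be conversions divided by clicks; some teams divide by impressions or sessions instead.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdStats:
    impressions: int
    clicks: int
    conversions: int
    spend: float  # total spend, in any single currency


def safe_div(numerator: float, denominator: float) -> Optional[float]:
    """Return numerator / denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None


def summarize(stats: AdStats) -> dict:
    return {
        "ctr": safe_div(stats.clicks, stats.impressions),  # click-through rate
        "cr": safe_div(stats.conversions, stats.clicks),   # conversion rate (assumed per click)
        "cpc": safe_div(stats.spend, stats.clicks),        # cost per click
        "cpa": safe_div(stats.spend, stats.conversions),   # cost per acquisition
    }


if __name__ == "__main__":
    print(summarize(AdStats(impressions=10_000, clicks=200, conversions=10, spend=100.0)))
```

Computing the same four numbers for Group A and Group B gives a like-for-like comparison of the two versions.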

Enhance User and Customer Experience

Through A/B testing, interface designs, messaging, and layouts are optimized based on real user behavior. This helps reduce bounce rate and increase overall engagement.


Data-Driven Decision Making

One of the biggest advantages of A/B testing is eliminating emotional bias in advertising design. Instead of relying on assumptions, marketers leverage real performance data from advertising platforms to optimize based on true customer insights.

Steps to Run an A/B Testing Advertising Experiment

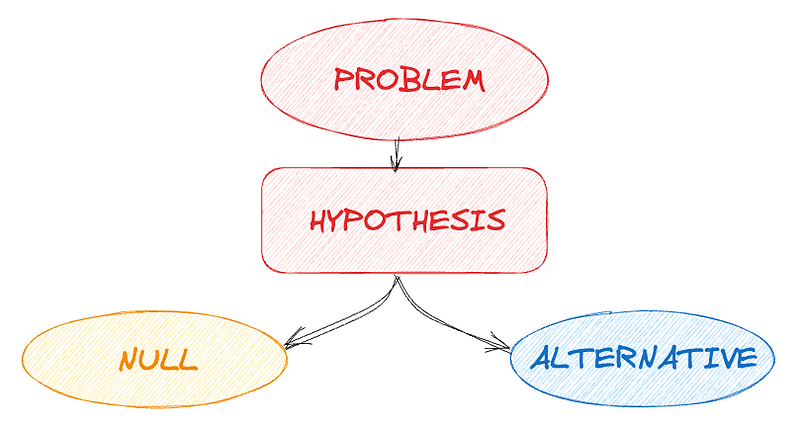

1. Define the Testing Objective

Before starting, you should rely on baseline data or market insights to clearly define the primary goal of the test. This helps determine which advertising element should be tested and which metrics will be used for measurement. This step also forms the foundation for building your testing hypothesis.

Common testing objectives include:

- Increase click-through rate (CTR) for ad creatives

- Improve conversion rate (CVR) on landing pages

- Reduce cost per click (CPC) or cost per acquisition (CPA)

- Evaluate the effectiveness of messaging, visuals, CTA, or targeting

2. Identify Variables to Test

A critical principle: change only one variable per test to accurately identify the factor driving performance differences.

Elements commonly tested in A/B testing include:

- Headline, for example “30% Discount Today” vs “Free Gifts for the First 100 Customers”

- Image or video, for example real people using the product vs product-only visuals

- Body copy

- CTA (call-to-action), for example “Buy Now” vs “Learn More”

- Target audience, for example mass audience, remarketing, or lookalike

- Placement or distribution channel, for example in-read vs outstream

- Ad scheduling and timing

3. Set Up A/B Testing Advertising Campaigns

After selecting the testing variable, you need to set up two independent ad groups:

- Group A: The current version or baseline

- Group B: The modified version

Note: Ensure all other elements remain constant, budgets are evenly distributed, and testing tools are enabled if available.
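One way to check the "everything else stays constant" rule before launch is to compare the two ad group configurations field by field. The sketch below assumes each ad group is represented as a plain dictionary; the field names (`headline`, `cta`, `daily_budget`, and so on) are hypothetical and do not correspond to any specific platform's settings.

```python
def changed_fields(variant_a: dict, variant_b: dict) -> list[str]:
    """Return the sorted names of fields whose values differ between the two variants."""
    keys = sorted(set(variant_a) | set(variant_b))
    return [key for key in keys if variant_a.get(key) != variant_b.get(key)]


def is_single_variable_test(variant_a: dict, variant_b: dict) -> bool:
    """True only when exactly one field differs, as a classic A/B test requires."""
    return len(changed_fields(variant_a, variant_b)) == 1


if __name__ == "__main__":
    group_a = {"headline": "30% Discount Today", "cta": "Buy Now",
               "audience": "remarketing", "daily_budget": 50}
    group_b = {"headline": "Free Gifts for the First 100 Customers", "cta": "Buy Now",
               "audience": "remarketing", "daily_budget": 50}
    print(changed_fields(group_a, group_b))
    print(is_single_variable_test(group_a, group_b))
```

If the check reports more than one changed field, the setup is closer to a multivariate test and the results can no longer be attributed to a single element.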

4. Run the Test for Sufficient Time and Sample Size

A common mistake is ending the test too early before collecting enough data, which leads to misleading conclusions. To avoid this, follow these principles:

- Run the test for at least 3 days, and ideally a full 7, so that the platform's delivery algorithm does not lock in on early, noisy results.

- Ensure each group reaches the minimum sample size required for statistical significance.

Common sample size formula: n = (Z² × p × (1 − p)) / E²

Where:

- Z: confidence level coefficient (1.96 for 95%)

- p: estimated conversion rate (e.g., 5% = 0.05)

- E: acceptable margin of error (e.g., 2% = 0.02)

Example: If you expect a 5% conversion rate and accept a ±2% margin of error, n = (1.96² × 0.05 × 0.95) / 0.02² ≈ 456.2, so you need approximately 457 users per group (about 23 expected conversions at a 5% rate).
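The formula above is easy to turn into a small calculator. The sketch below is a direct transcription of it; the function name `sample_size` is an illustrative choice. Note that this formula sizes the estimate of a single conversion rate within a margin of error, so treat the result as a per-group minimum rather than a guarantee that a small difference between A and B will be detectable.

```python
import math


def sample_size(p: float, margin: float, z: float = 1.96) -> int:
    """Minimum observations per group: n = (Z^2 * p * (1 - p)) / E^2, rounded up.

    p      -- estimated conversion rate (0.05 for 5%)
    margin -- acceptable margin of error E (0.02 for 2 percentage points)
    z      -- confidence coefficient Z (1.96 for 95%)
    """
    if not 0 < p < 1:
        raise ValueError("p must be between 0 and 1")
    if margin <= 0:
        raise ValueError("margin must be positive")
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


if __name__ == "__main__":
    # The article's example: 5% expected conversion rate, ±2% margin of error.
    print(sample_size(p=0.05, margin=0.02))
```

Because the margin of error is squared in the denominator, halving it roughly quadruples the required sample, which is why tight margins quickly become expensive.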

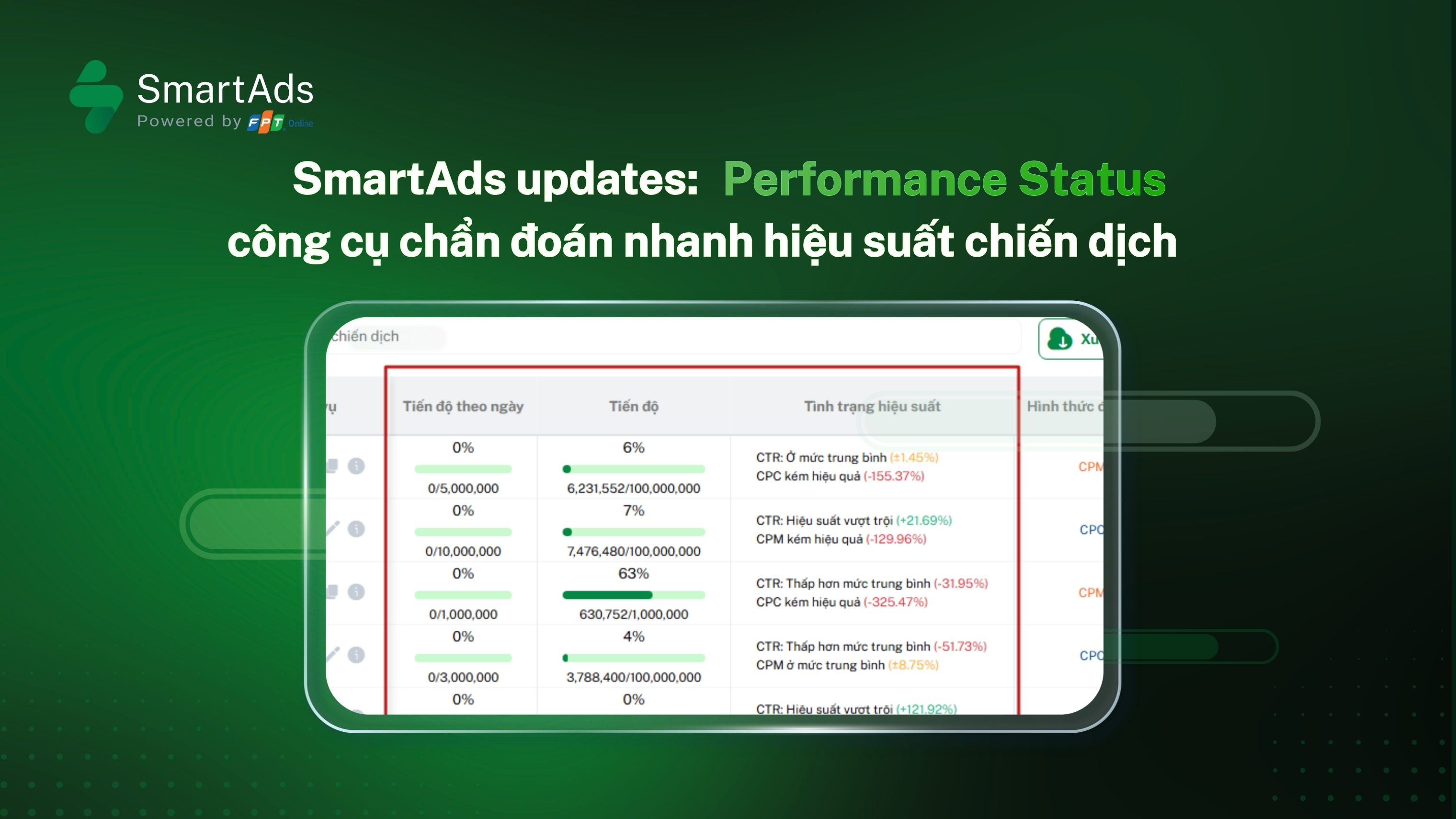

5. Analyze and Evaluate Results

Once sufficient data is collected, compare key metrics between the two groups. If the difference is statistically significant rather than random variation, you can attribute it to the variable you changed. At this stage, you can:

- Keep the better-performing variant for the main campaign

- Document test results for internal reference

- Use insights gained to plan the next optimization test, creating a continuous improvement loop
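To judge whether a difference between the two groups is more than noise, one common option is a two-proportion z-test. The article does not prescribe a specific test, so the sketch below is one reasonable choice rather than the required method; the function name and the example counts are made up for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates B minus A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test: pooled rate is 0 or 1")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 50 of 1,000 users converted in A, 80 of 1,000 in B.
    z, p_value = two_proportion_z_test(conv_a=50, n_a=1000, conv_b=80, n_b=1000)
    print(f"z = {z:.2f}, p = {p_value:.4f}")
    print("significant at 95%" if p_value < 0.05 else "not significant at 95%")
```

A p-value below 0.05 corresponds to the 95% confidence level used in the sample size formula; many advertising platforms and analytics tools report an equivalent figure directly.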

Notes and checklist for running A/B Testing in advertising

To execute A/B testing effectively, marketers should keep the following in mind:

- Ensure sufficient sample size and run tests for a full 7 days where possible to reduce data bias and the influence of outliers.

- Change only one variable at a time to accurately identify the cause of performance shifts.

- Select appropriate tools to collect and compare data, and judge results by statistical significance rather than subjective opinion.

- Avoid running too many tests at once without proper control of independent variables, as overlapping tests can compromise data accuracy.

- Define a clear hypothesis before starting the test.

- Choose a testing period that reflects normal conditions; an unrepresentative period undermines objectivity.


Conclusion

Applying a structured A/B testing process in advertising not only helps reduce wasted budget and improve investment efficiency, but also builds a solid foundation for sustainable conversion growth. Start with small experiments, capture meaningful insights, and iterate continuously; this is how high-performing marketers improve results over time.

About SmartAds

SmartAds, formerly known as Eclick, is developed by FPT Online—one of Vietnam’s pioneers in digital technology and media. Through continuous innovation and growth, and with a strong network of leading premium publishers, SmartAds reinforces its position as a frontrunner in elevating native advertising standards in Vietnam, while aspiring to become a trusted partner for brands on their journey to winning customer attention.

If you are looking for an advertising solution that optimizes both brand visibility and performance, don’t hesitate to create an account and experience campaign setup on SmartAds here.